Machine Learning (ML) has become central to how businesses extract insights from data and solve complex problems. An effective ML stack is what makes AI solutions scalable: it lets teams handle large volumes of data and keep improving their models over time. This post walks through how to build an ML stack, outlines each component’s role in the ecosystem, and offers guidance on selecting the right ML infrastructure and tools.


Understanding the ML Stack

A Machine Learning stack refers to the combination of technologies and tools that work together to facilitate the development, deployment, and maintenance of machine learning models. Building the right stack ensures seamless data processing, efficient model training, and scalable AI solutions.

The key components of an ML stack include:

- ML Infrastructure

- ML Tools

- Data Science Tools

- End-to-End ML Pipeline

- Model Deployment Platforms

The Core Components of an ML Stack

1. ML Infrastructure

The foundation of any machine learning solution lies in its infrastructure. ML infrastructure encompasses the hardware and software resources necessary to build and train models. This includes computing power, data storage, and networking capabilities.

- Cloud-Based Infrastructure: Cloud services like AWS, Azure, and Google Cloud offer scalable infrastructure tailored for ML needs.

- On-Premises Infrastructure: Some companies run their own hardware, which gives them more control over data and security but typically means higher upfront and maintenance costs.

The choice between cloud-based and on-premise infrastructure depends on factors like data privacy, scalability, and cost.

2. ML Tools

Machine learning tools are essential for building and optimizing models. These include libraries, frameworks, and other utilities that simplify development.

- Machine Learning Frameworks: Popular frameworks like TensorFlow and PyTorch are widely used for developing ML models. They provide predefined functions to build and train models efficiently.

- Feature Engineering Tools: Tools like Featuretools and scikit-learn help transform raw data into meaningful features that improve model accuracy.
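To make "transforming raw data into meaningful features" concrete, here is a minimal sketch of two common transformations: scaling a numeric column and one-hot encoding a categorical one. It deliberately uses only the Python standard library rather than Featuretools or scikit-learn, and the column names and records are hypothetical.

```python
from statistics import mean, pstdev


def standardize(values):
    """Scale numbers to zero mean and unit variance (z-scores)."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        # A constant column carries no information; map it to zeros.
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


def one_hot(values):
    """Encode a categorical column as 0/1 indicator columns, one per category."""
    categories = sorted(set(values))
    encoded = [[1 if v == c else 0 for c in categories] for v in values]
    return categories, encoded


# Hypothetical raw records: (monthly_spend, plan)
raw = [(20.0, "basic"), (40.0, "pro"), (60.0, "basic")]

spend = standardize([r[0] for r in raw])
categories, plan = one_hot([r[1] for r in raw])

# One feature row per record: scaled spend followed by the plan indicators.
features = [[s] + p for s, p in zip(spend, plan)]
```

In a real stack, a library would do the same work with more safeguards, such as remembering the training-set mean so that new data is scaled the same way.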

3. Data Science Tools

Data science tools help with the entire data lifecycle—collection, processing, analysis, and visualization. Data preprocessing, feature engineering, and model training are all part of this category.

- Data Preprocessing in ML: Libraries like pandas and Dask are critical for cleaning and preparing raw data before it is fed into an ML model. Dask offers a pandas-like API for datasets that are too large to fit in memory.

- ML Experiment Tracking: Tools like MLflow and Weights & Biases track experiments, keeping a record of changes in data, models, and results.
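The idea behind experiment tracking is simple: every run records the parameters it used and the metrics it produced, so runs can be compared later. The sketch below illustrates that idea with a JSON-lines file and the standard library only. It is not the MLflow or Weights & Biases API; the function names, parameter names, and accuracy values are all made up for illustration.

```python
import json
import tempfile
import time
from pathlib import Path


def log_run(log_path, params, metrics):
    """Append one experiment run (parameters plus resulting metrics) as a JSON line."""
    record = {"timestamp": time.time(), "params": params, "metrics": metrics}
    with open(log_path, "a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")


def best_run(log_path, metric):
    """Return the logged run with the highest value for the given metric."""
    with open(log_path, encoding="utf-8") as f:
        runs = [json.loads(line) for line in f if line.strip()]
    return max(runs, key=lambda r: r["metrics"][metric])


# Hypothetical runs written to a temporary directory.
log_path = Path(tempfile.mkdtemp()) / "runs.jsonl"
log_run(log_path, {"learning_rate": 0.1, "max_depth": 3}, {"accuracy": 0.81})
log_run(log_path, {"learning_rate": 0.05, "max_depth": 5}, {"accuracy": 0.86})

best = best_run(log_path, "accuracy")
```

Dedicated tracking tools add what this sketch lacks: a shared server, artifact storage, and a UI for comparing runs.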

4. End-to-End ML Pipeline

The End-to-End ML Pipeline ensures the smooth flow of data through different stages of the ML lifecycle. It starts from data collection and ends with model deployment. This pipeline involves the following steps:

- Data Ingestion: Collecting raw data from various sources.

- Data Preprocessing: Cleaning and transforming data to make it usable.

- Model Development: Using tools like TensorFlow or PyTorch to build models.

- Model Training: Training the models using datasets and algorithms.

- Model Deployment: Deploying models into production environments for real-world use.

By automating and integrating these steps, businesses can streamline the ML development process.

| Stage | Tools & Frameworks |
|---|---|
| Data Ingestion | Apache Kafka, AWS Kinesis |
| Data Preprocessing | Pandas, Dask |
| Model Development | TensorFlow, PyTorch, Scikit-learn |
| Model Training | Keras, XGBoost, LightGBM |
| Model Deployment | Kubernetes, Docker, MLflow |
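The stages listed above can be sketched as a chain of small functions, each consuming the previous stage's output. This is a toy illustration under stated assumptions: the data is hard-coded, the "model" is a one-parameter least-squares fit, and "deployment" just returns a callable. The function names are hypothetical, and a production pipeline would swap each stage for the tools in the table.

```python
def ingest():
    """Data ingestion: stand-in for reading from a stream or database."""
    return [
        {"x": "1.0", "y": "2.1"},
        {"x": "2.0", "y": "3.9"},
        {"x": None, "y": "5.0"},  # incomplete record
        {"x": "3.0", "y": "6.0"},
    ]


def preprocess(rows):
    """Data preprocessing: drop incomplete rows and convert strings to floats."""
    return [
        (float(r["x"]), float(r["y"]))
        for r in rows
        if r["x"] is not None and r["y"] is not None
    ]


def train(pairs):
    """Model development and training: fit y = w * x by least squares."""
    w = sum(x * y for x, y in pairs) / sum(x * x for x, _ in pairs)
    return {"w": w}


def deploy(model):
    """Model deployment: wrap the trained parameter in a callable predictor."""
    return lambda x: model["w"] * x


def run_pipeline():
    """Run every stage in order and return the deployed predictor."""
    return deploy(train(preprocess(ingest())))


predict = run_pipeline()
```

Because each stage has a single input and output, any one of them can be automated, tested, or replaced without touching the others.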

5. Model Deployment Platforms

After training, deploying machine learning models into production is critical for real-time decision-making. ML Deployment Platforms handle the operationalization of models.

- AI Model Serving: This process involves exposing machine learning models as services, typically via REST or gRPC APIs. Tools like TensorFlow Serving and TorchServe are built for this purpose.

- Scalable ML Solutions: Platforms like Kubeflow and MLflow help package, deploy, and manage models so they can scale across cloud and on-premises infrastructure.
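To show what "exposing a model as a service" means in practice, here is a minimal sketch of a prediction endpoint built on Python's standard `http.server` module. It is not how TensorFlow Serving or TorchServe work internally; the `/predict` path, the `inputs` field, and the placeholder model (`y = WEIGHT * x`) are all assumptions made for illustration.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

WEIGHT = 2.0  # placeholder "model": y = WEIGHT * x


def handle_predict(body: bytes):
    """Parse a JSON request body and return (status_code, response_bytes)."""
    try:
        payload = json.loads(body)
        inputs = [float(x) for x in payload["inputs"]]
    except (ValueError, KeyError, TypeError):
        error = {"error": "expected a JSON object with an 'inputs' list of numbers"}
        return 400, json.dumps(error).encode()
    predictions = [WEIGHT * x for x in inputs]
    return 200, json.dumps({"predictions": predictions}).encode()


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        # Only the hypothetical /predict route is served.
        if self.path != "/predict":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        status, response = handle_predict(self.rfile.read(length))
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(response)))
        self.end_headers()
        self.wfile.write(response)


def serve(port: int = 8000):
    """Start the server on localhost; blocks until interrupted."""
    HTTPServer(("127.0.0.1", port), PredictHandler).serve_forever()
```

Calling `serve()` starts the endpoint locally, after which a POST to `/predict` with a body such as `{"inputs": [1, 2.5]}` returns the predictions as JSON. Dedicated serving tools add batching, model versioning, and hardware acceleration on top of this basic request-response pattern.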

Choosing the Right AI and ML Frameworks

AI and ML frameworks provide pre-built algorithms and functions, saving developers significant time. Two of the most widely used frameworks are:

- TensorFlow: An open-source framework developed by Google, ideal for both research and production.

- PyTorch: A popular deep learning framework known for its dynamic computation graphs and simplicity, preferred by researchers and developers alike.

Both frameworks offer powerful capabilities, and the right choice depends on the specific use case. TensorFlow has long-established production tooling such as TensorFlow Serving, while PyTorch is often favored for rapid experimentation and research.

Automating the Machine Learning Pipeline with AutoML

Automated Machine Learning (AutoML) tools let non-experts create and deploy models without deep technical knowledge.

- Google Cloud AutoML and H2O.ai allow developers to create high-performing models by automating tasks like feature selection, hyperparameter tuning, and model selection.

By incorporating AutoML, organizations can improve productivity and accelerate the development cycle.
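At its core, the hyperparameter tuning that AutoML automates is a search loop: try candidate settings, score each on held-out data, and keep the best. The sketch below shows that loop as a plain grid search over the penalty of a one-feature ridge regression. It is a simplified illustration, not the algorithm used by Google Cloud AutoML or H2O.ai, and the data and candidate values are hypothetical.

```python
def fit_ridge(pairs, lam):
    """Fit y = w * x with L2 penalty lam (closed form for a single feature)."""
    return sum(x * y for x, y in pairs) / (sum(x * x for x, _ in pairs) + lam)


def mse(pairs, w):
    """Mean squared error of the model y = w * x on the given pairs."""
    return sum((y - w * x) ** 2 for x, y in pairs) / len(pairs)


def grid_search(train, valid, lams):
    """Try every candidate penalty and keep the one with the lowest validation error."""
    results = []
    for lam in lams:
        w = fit_ridge(train, lam)
        results.append((mse(valid, w), lam, w))
    return min(results)  # tuples compare by validation error first


# Hypothetical data generated from y = 2x, split into training and validation sets.
train = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]
valid = [(4.0, 8.0), (5.0, 10.0)]

best_mse, best_lam, best_w = grid_search(train, valid, [0.0, 0.1, 1.0, 10.0])
```

AutoML systems extend the same loop to search across model families and feature transformations, usually with smarter strategies than an exhaustive grid.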

Model Monitoring and Maintenance

Once deployed, models need to be continuously monitored to ensure they remain accurate and effective. Model Monitoring and Maintenance involve tracking model performance, detecting drift, and updating models as necessary.

- Model Drift Detection: Tools like Evidently AI can track the change in data distributions, signaling when a model might need retraining.

- Model Update Automation: Automating the retraining and redeployment process ensures models evolve with changing data trends.
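One simple way to quantify a change in data distribution is the Population Stability Index (PSI), which compares how a feature's values fall into bins at training time versus in production. The sketch below is a standard-library illustration of that metric, not Evidently AI's implementation. The bin count, the `eps` floor, and the 0.2 alert threshold are assumptions that would need tuning per feature.

```python
import math

DRIFT_THRESHOLD = 0.2  # assumed rule-of-thumb cutoff; tune per feature


def psi(reference, current, bins=4, eps=1e-6):
    """Population Stability Index between two samples of one numeric feature."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins
    if width == 0:
        width = 1.0  # constant reference sample; avoid division by zero

    def proportions(sample):
        counts = [0] * bins
        for v in sample:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # Floor each proportion at eps so the logarithm below is defined.
        return [max(c / len(sample), eps) for c in counts]

    ref_p, cur_p = proportions(reference), proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_p, cur_p))


def needs_retraining(reference, current):
    """Flag the feature when its PSI exceeds the assumed threshold."""
    return psi(reference, current) > DRIFT_THRESHOLD


# Hypothetical feature values: training-time sample vs. a shifted production sample.
reference = [0.1 * i for i in range(100)]
shifted = [v + 5.0 for v in reference]
```

Here `needs_retraining(reference, reference)` is false because the distributions are identical, while `needs_retraining(reference, shifted)` is true. A check like this can serve as the trigger for the automated retraining step described above.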

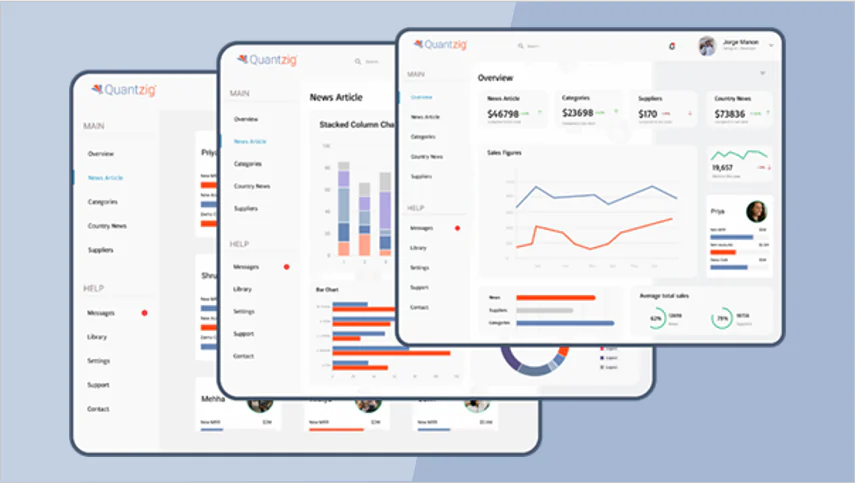

Quantzig’s Services in Building Scalable AI Solutions

Quantzig is a global leader in providing data analytics and AI solutions tailored for businesses across industries. They offer a range of services to help organizations build the perfect ML stack:

- Custom AI and ML Solutions: Quantzig helps businesses design and implement AI models tailored to their specific needs, whether for demand forecasting, recommendation engines, or customer insights.

- Data Science and Analytics Consulting: They provide end-to-end data science consulting, from data preprocessing to model development and deployment.

- AI Integration: Quantzig’s expertise extends to integrating machine learning solutions with existing business processes, ensuring smooth deployment and real-time insights.

- Automated Model Monitoring: They offer advanced solutions for continuous model monitoring, ensuring that models remain accurate and adapt to changing business conditions.

By leveraging Quantzig’s expertise, businesses can create scalable and efficient ML solutions that drive innovation and business growth.

Conclusion

Building the perfect ML stack for scalable AI solutions requires selecting the right mix of infrastructure, tools, and frameworks. From data preprocessing to model deployment and monitoring, each component plays a crucial role in ensuring a seamless machine learning workflow. Integrating AutoML capabilities, leveraging TensorFlow or PyTorch, and ensuring scalable solutions through platforms like Kubernetes or MLflow can accelerate your journey toward a fully optimized ML ecosystem.

By continuously evolving and integrating new tools and techniques, businesses can build powerful, scalable AI solutions that bring substantial value in the rapidly advancing world of machine learning.
