Businesses increasingly rely on artificial intelligence (AI) and machine learning (ML) to gain insights, improve decision-making, and stay competitive. Yet many organizations struggle to integrate models into their operations and to keep them delivering value at scale. This is where MLOps, or Machine Learning Operations, comes in: a discipline that bridges the gap between AI models and tangible business value.

What is MLOps?

MLOps refers to the practice of applying DevOps principles to machine learning models, facilitating collaboration between data scientists, IT professionals, and business stakeholders. It aims to streamline the end-to-end lifecycle of machine learning models, from development and deployment to monitoring and continuous improvement.

At its core, MLOps enables the automation and optimization of AI and ML workflows, allowing businesses to deploy and manage models efficiently at scale. The goal is to ensure that machine learning models deliver consistent value and are aligned with business objectives over time.

Key Components of the MLOps Stack

The MLOps stack encompasses the tools and technologies required to build, deploy, monitor, and manage machine learning models. It involves various components that work together to automate the processes, ensuring smooth integration and continuous performance.

MLOps Tools

MLOps tools play a vital role in simplifying and automating various stages of the machine learning lifecycle. These tools help in everything from data preprocessing and model training to deployment and monitoring. Some popular MLOps tools include:

- Kubeflow: An open-source platform for building, deploying, and managing ML workflows on Kubernetes.

- TensorFlow Extended (TFX): A production-ready framework that simplifies building scalable machine learning pipelines.

- MLflow: An open-source platform that tracks machine learning experiments, packages code into reproducible runs, and manages deployment.

- DVC (Data Version Control): A tool that allows versioning of datasets and models, enabling reproducibility in ML projects.

Machine Learning Operations Stack

The machine learning operations stack includes a set of interconnected layers that facilitate seamless development and deployment of ML models. This stack typically includes:

- Data Pipeline: Manages the flow of data from source to model input, ensuring that clean, reliable data is available for training.

- Model Training and Testing: Involves selecting algorithms, training models, and validating their accuracy and performance.

- Model Deployment: Releases the trained model into a production environment where it can make predictions and deliver value.

- Model Monitoring and Maintenance: Ensures that the deployed model performs as expected and remains aligned with business objectives. This includes tracking performance, identifying drift, and retraining models as necessary.

The MLOps Pipeline: A Seamless Workflow for Machine Learning Models

The MLOps pipeline is the end-to-end sequence of automated steps involved in training, deploying, and maintaining machine learning models. Each step in the pipeline is designed to optimize the model development process and ensure its operational efficiency.

Steps in an MLOps Pipeline

- Data Collection and Preprocessing: Collect data from various sources, clean it, and prepare it for model training.

- Model Development: Choose the right machine learning algorithms, train the model, and tune its hyperparameters.

- Model Validation and Testing: Evaluate the model on held-out validation and test data, and refine it if it falls short of the target metrics.

- Model Deployment: Once the model is ready, deploy it into production systems, where it can serve real-time or batch predictions.

- Model Monitoring: Track the model’s performance in production, identify potential issues like drift, and initiate retraining when necessary.

- Model Versioning: Maintain multiple versions of models to ensure compatibility and easy rollback if issues arise.
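
The steps above can be sketched as a chain of small functions. This is a minimal illustration under stated assumptions, not a production pipeline: the data, the threshold "model", the function names, and the `min_accuracy` gate are all hypothetical.

```python
from statistics import mean

def collect():
    # Hypothetical raw records: (feature, label); None marks a missing value.
    return [(1.0, 0), (2.0, 0), (None, 1), (8.0, 1),
            (9.0, 1), (1.5, 0), (7.5, 1), (2.5, 0)]

def preprocess(rows):
    # Cleaning step: drop rows with a missing feature.
    return [(x, y) for x, y in rows if x is not None]

def split(rows, holdout=0.25):
    # Ordered split for simplicity; a real pipeline would shuffle or split by time.
    cut = int(len(rows) * (1 - holdout))
    return rows[:cut], rows[cut:]

def train(rows):
    # Toy "model": a threshold halfway between the two class means.
    zeros = [x for x, y in rows if y == 0]
    ones = [x for x, y in rows if y == 1]
    return (mean(zeros) + mean(ones)) / 2

def validate(threshold, rows):
    # Accuracy of the rule "predict 1 when the feature exceeds the threshold".
    correct = sum((x > threshold) == bool(y) for x, y in rows)
    return correct / len(rows)

def run_pipeline(min_accuracy=0.9):
    rows = preprocess(collect())
    train_rows, test_rows = split(rows)
    model = train(train_rows)
    accuracy = validate(model, test_rows)
    # The deployment decision is gated on the validation result.
    return {"model": model, "accuracy": accuracy, "deploy": accuracy >= min_accuracy}

print(run_pipeline())
```

In practice each function would be a separate, independently scheduled pipeline stage, but the control flow stays the same: every stage consumes the previous stage's output, and deployment only happens when validation passes.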

MLOps Architecture: The Backbone of AI Operations

The MLOps architecture defines the structure that supports the implementation of machine learning operations across an organization. It consists of several key components, including:

- Data Storage and Management: Ensures that large volumes of data can be efficiently stored, accessed, and processed.

- Model Repository: A centralized location where models are stored, versioned, and managed.

- Automation and Orchestration: Coordinates the training, deployment, and operation of ML models across environments, so that handoffs between teams do not depend on manual steps.

- Monitoring and Analytics: Provides insights into the model’s performance, allowing stakeholders to make informed decisions.
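
To make the model repository idea concrete, here is a minimal in-memory sketch of versioning, promotion, and rollback. The `ModelRegistry` class, its method names, and the artifact paths are hypothetical; real registries persist artifacts and metadata rather than holding them in memory.

```python
class ModelRegistry:
    """Hypothetical in-memory registry used only to illustrate versioning."""

    def __init__(self):
        self._versions = {}  # version number -> metadata
        self._history = []   # versions promoted to production, oldest first

    def register(self, artifact_uri, metrics):
        # Each registration gets the next sequential version number.
        version = len(self._versions) + 1
        self._versions[version] = {"artifact_uri": artifact_uri, "metrics": metrics}
        return version

    def promote(self, version):
        if version not in self._versions:
            raise KeyError(f"unknown version {version}")
        self._history.append(version)

    def rollback(self):
        # Return to the version that was in production before the current one.
        if len(self._history) < 2:
            raise RuntimeError("no earlier production version to roll back to")
        self._history.pop()
        return self._history[-1]

    @property
    def production(self):
        return self._history[-1] if self._history else None


registry = ModelRegistry()
v1 = registry.register("models/churn/1", {"accuracy": 0.91})
v2 = registry.register("models/churn/2", {"accuracy": 0.93})
registry.promote(v1)
registry.promote(v2)
print(registry.production)  # version currently serving
print(registry.rollback())  # version restored after rollback
```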

Benefits of a Strong MLOps Architecture

- Scalability: Automates workflows, allowing models to scale efficiently in response to increasing data and business needs.

- Consistency: Reduces human error and ensures that processes are repeatable and reliable.

- Collaboration: Breaks down silos between data scientists, IT, and business teams, fostering a culture of collaboration.

- Speed: Accelerates the deployment of AI solutions, enabling organizations to respond faster to market demands.

Continuous Integration and Deployment (CI/CD) in MLOps

One of the fundamental practices in MLOps is Continuous Integration and Continuous Deployment (CI/CD). In the context of machine learning, CI/CD involves continuously integrating changes to code, data, and model configuration, testing them, and deploying updates to the production environment. This practice ensures that models can be quickly updated and improved based on new data and feedback.

- CI: Focuses on automating the process of merging changes, running tests, and ensuring that new code does not break the system.

- CD: Involves automating the deployment process, making sure that updated models are seamlessly integrated into production.
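
One way a CI step can test a model, not just the code around it, is a quality gate that compares a candidate's metrics against the model currently in production. The sketch below is illustrative only: the function name, the metric dictionary shape, and both thresholds are assumptions rather than recommended values.

```python
def passes_quality_gate(candidate, production, min_accuracy=0.85, max_regression=0.01):
    """Return (ok, reasons) for a candidate model's metrics.

    candidate / production are dicts with an "accuracy" key; production may be
    None when nothing has been deployed yet. Thresholds are illustrative.
    """
    reasons = []
    if candidate["accuracy"] < min_accuracy:
        reasons.append("accuracy below absolute floor")
    if production is not None and \
            candidate["accuracy"] < production["accuracy"] - max_regression:
        reasons.append("accuracy regressed against production")
    return (not reasons, reasons)


ok, reasons = passes_quality_gate({"accuracy": 0.88}, {"accuracy": 0.93})
print(ok, reasons)
```

A CI job would fail the build when `ok` is false, and the CD stage would run only for candidates that pass.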

The Role of CI/CD in MLOps

- Faster Releases: CI/CD allows teams to release new versions of models and software quickly and reliably.

- Error Reduction: Automated tests catch errors early, reducing the likelihood of issues in production.

- Improved Collaboration: CI/CD workflows help streamline communication between data scientists, engineers, and business stakeholders.

Model Deployment Automation: Enhancing Efficiency

Model deployment automation is a crucial aspect of MLOps that ensures machine learning models are deployed in production environments with minimal manual intervention. This not only saves time but also minimizes errors during the deployment process.

Key Aspects of Model Deployment Automation

- Automation Tools: Use tools like Kubeflow and TensorFlow Extended to automate the deployment process.

- Containerization: Docker packages a model and its dependencies into a container image, and Kubernetes orchestrates those containers, making models easy to deploy and scale.

- Version Control: Implement versioning to ensure that only tested and approved models are deployed.
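
As a rough sketch of the "only tested and approved models are deployed" rule, an automated deployment step can refuse any artifact whose approval status or checksum does not match its manifest. The manifest fields (`status`, `sha256`) and the `deploy` callback are hypothetical; only `hashlib.sha256` is a real library call.

```python
import hashlib

def verify_and_deploy(artifact: bytes, manifest: dict, deploy) -> bool:
    """Call deploy(artifact) only if the manifest approves exactly these bytes."""
    if manifest.get("status") != "approved":
        return False  # never deploy a model that has not been signed off
    if manifest.get("sha256") != hashlib.sha256(artifact).hexdigest():
        return False  # artifact differs from the one that was tested
    deploy(artifact)
    return True


artifact = b"serialized-model-bytes"
manifest = {"status": "approved", "sha256": hashlib.sha256(artifact).hexdigest()}
deployed = []
print(verify_and_deploy(artifact, manifest, deployed.append))
print(verify_and_deploy(b"tampered-bytes", manifest, deployed.append))
```

Checking the hash ties the approval to a specific artifact, so a rebuilt or modified model cannot inherit an earlier sign-off.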

Model Monitoring in MLOps: Ensuring Performance

Model monitoring in MLOps is essential for ensuring that deployed models continue to perform well in real-world scenarios. Without monitoring, models can degrade over time due to changes in data or external factors.

Key Monitoring Activities

- Performance Tracking: Track the model’s accuracy, precision, and other metrics in production, in real time where ground-truth labels are available.

- Model Drift Detection: Identify when a model’s performance begins to degrade due to changes in data distributions.

- Model Retraining: Trigger retraining processes based on new data or identified performance issues.
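
Drift detection and a retraining trigger can be sketched with the Population Stability Index (PSI), one common way to compare a feature's training-time distribution with its live distribution. This is a simplified example with assumptions: equal-width bins derived from the reference sample, a small `eps` to avoid taking the log of zero, and a 0.2 threshold that is a frequently quoted rule of thumb rather than a universal constant.

```python
import math

def psi(expected, actual, bins=5, eps=1e-6):
    """Population Stability Index between a reference sample and a live sample."""
    lo, hi = min(expected), max(expected)
    width = ((hi - lo) / bins) or 1.0  # fall back to 1.0 if all values are equal

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

def needs_retraining(expected, actual, threshold=0.2):
    # The threshold is an assumption; tune it per feature and use case.
    return psi(expected, actual) > threshold


reference = [i / 10 for i in range(100)]  # feature values seen at training time
shifted = [x + 5 for x in reference]      # live values after a distribution shift
print(needs_retraining(reference, reference))
print(needs_retraining(reference, shifted))
```

A monitoring job would run a check like this on a schedule for each important feature and start the retraining pipeline when it returns true.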

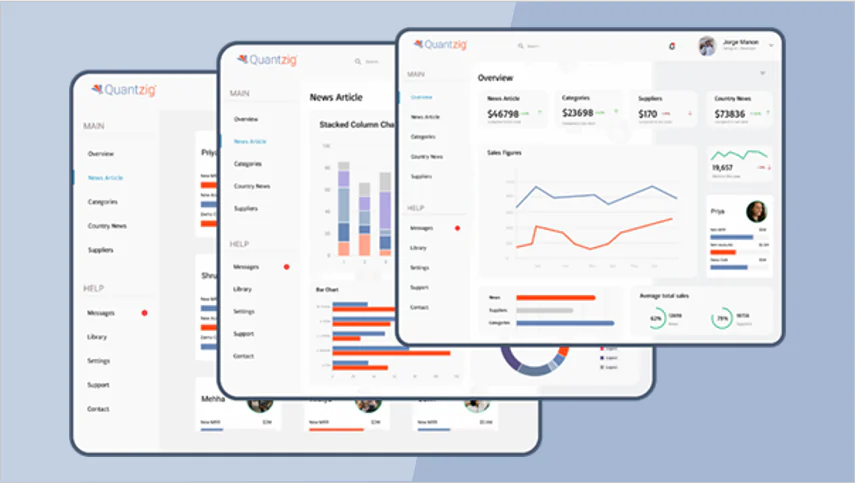

Quantzig’s Services in MLOps

Quantzig, a leader in advanced analytics, provides comprehensive MLOps services designed to help businesses scale their AI and machine learning initiatives. They offer:

- End-to-End MLOps Lifecycle Management: From model development and deployment to monitoring and optimization, Quantzig manages the entire MLOps lifecycle.

- Cloud-Based MLOps: Quantzig leverages cloud platforms like AWS, Azure, and Google Cloud to ensure scalable and secure MLOps solutions.

- Model Versioning and Management: They specialize in managing different versions of models to ensure consistent performance and seamless updates.

- Data Pipeline and Automation: Quantzig implements robust data pipelines that automate the flow of data, ensuring high-quality inputs for model training.

Conclusion

MLOps plays a pivotal role in making machine learning models more accessible, scalable, and aligned with business goals. By automating the lifecycle of AI and machine learning models—from development to deployment and monitoring—organizations can unlock the true potential of their AI investments. With the right MLOps stack, tools, and platforms, businesses can scale AI models effectively and bridge the gap between AI capabilities and real business value. For companies looking to navigate the complexities of MLOps, partnering with experts like Quantzig can streamline this journey and accelerate success.
