Data veracity refers to the accuracy and trustworthiness of data that is being analyzed. The quality of data is influenced by various factors, such as its source, the methods used for its collection, and how it is analyzed.

The veracity of data determines its reliability and relevance. Low-veracity data typically contains a significant amount of irrelevant or “noisy” information, which adds no value to an organization’s analysis. In contrast, high-veracity data is rich in valuable and meaningful records, which improve the overall analysis and decision-making process.

Simply accumulating large amounts of data doesn’t guarantee its accuracy or cleanliness. For example, data gathered from social media should ideally be extracted directly from the platform, rather than through third-party systems, to ensure its quality isn’t compromised. To maximize the effectiveness of data, it must remain consolidated, cleansed, consistent, and up-to-date, allowing businesses to make informed and strategic decisions.

Ensuring data accuracy, data integrity, and data consistency is paramount. In this post, we explore the importance of data veracity in the age of Big Data, the challenges involved, and effective strategies for maintaining data quality and data trustworthiness.

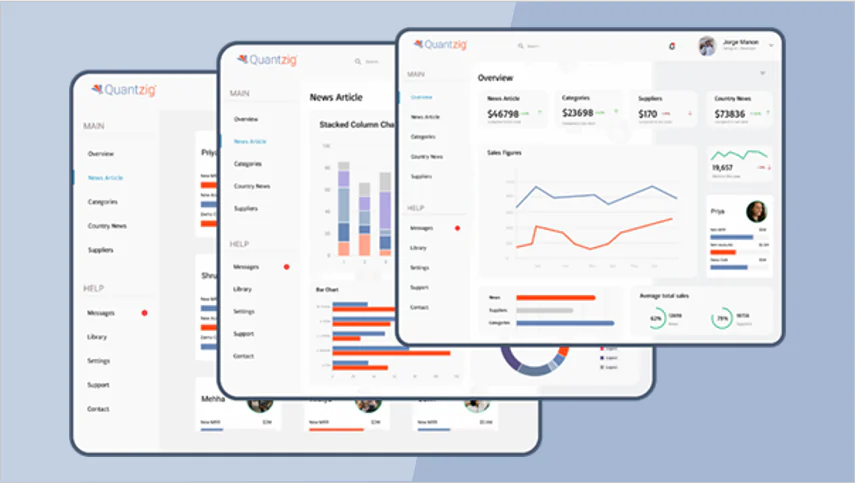

What is Veracity in Big Data?

Veracity in Big Data refers to the reliability, accuracy, and quality of the data. It encompasses:

- Data Accuracy: The degree to which data is correct and error-free.

- Data Integrity: The consistency and trustworthiness of the data over time.

- Data Consistency: Ensuring that data remains uniform and compatible across different sources and systems.

- Data Reliability: The ability to consistently trust data to provide valid insights for decision-making.

In the context of Big Data, ensuring data veracity is critical because businesses rely on data to drive everything from operational strategies to customer engagement. If data is flawed or unreliable, the decisions based on it will be equally flawed.

The Importance of Data Veracity in Big Data

As organizations continue to adopt Big Data technologies to power their operations, the quality of that data becomes central to success. Here are some key reasons why data veracity is so important:

- Business Decision-Making: Accurate and reliable data leads to better decision-making. When data is accurate, decisions based on that data are more likely to produce positive results.

- Customer Insights: Consistent and high-quality data is essential for understanding customer behavior, preferences, and needs. Inaccurate or inconsistent data can lead to misinterpretation of customer sentiment.

- Regulatory Compliance: Many industries, such as healthcare and finance, are highly regulated. Data errors or inconsistencies could lead to compliance issues, risking hefty fines or legal action.

- Operational Efficiency: Ensuring that the data powering processes and automation is accurate means better operational outcomes—such as cost savings and faster decision-making.

- Trustworthiness of Predictions: With Big Data being used for predictive analytics and machine learning, ensuring data trustworthiness is key to reliable forecasts.

What Are the Sources of Data Veracity Issues?

Several factors can undermine the veracity of data, leading to inaccuracies or inconsistencies. Below are the key sources of veracity problems:

- Bias: Bias occurs when certain data elements are overrepresented, leading to inaccurate or skewed insights. This can happen when statistical calculations are influenced by biased data inputs.

- Software Bugs: Bugs in software or applications can distort or miscalculate data, affecting the quality and accuracy of the information.

- Noise: Noise refers to irrelevant or extraneous data that doesn’t add value. High noise levels increase the need for data cleaning to extract meaningful insights.

- Abnormalities: Anomalies or outliers are data points that differ significantly from the norm. They can signal errors or issues such as fraud, for example unusual credit card transactions.

- Uncertainty: Uncertainty arises from ambiguous or imprecise data. It often involves data points that deviate from expected or intended values, leading to a lack of clarity.

- Data Lineage: Data lineage involves tracking the origin and movement of data across various systems. If data sources are unreliable or difficult to trace, it can impact the overall trustworthiness of the data.

These sources can significantly influence the quality of data, making it essential to address them for accurate and reliable data analysis.

Big Data Challenges Impacting Data Veracity

As organizations handle massive volumes of data, they face numerous Big Data challenges that threaten data veracity. Here are some of the common ones:

| Big Data Challenge | Impact on Data Veracity |
|---|---|
| Data Volume | Handling vast amounts of data increases the likelihood of errors, inconsistencies, and incomplete datasets. |
| Data Variety | Data comes in different formats (structured, unstructured, and semi-structured), making it challenging to ensure uniform quality. |
| Data Velocity | Real-time data feeds and fast-paced data generation can introduce errors before they can be detected or corrected. |
| Data Integration | Combining data from multiple sources with varying formats and standards can introduce inconsistencies and discrepancies. |
| Human Error | Manual data entry or misinterpretation can lead to flawed data that impacts decision-making. |
| Data Bias | Bias in data collection or analysis can compromise data integrity and result in misleading insights. |

These challenges make it difficult to maintain data consistency, data reliability, and overall data quality across the board.

Strategies to Ensure Data Veracity

Now that we understand the importance of data veracity and the challenges in Big Data, let’s look at some practical strategies to ensure data stays accurate, reliable, and consistent:

1. Data Governance: Establishing a Framework for Trustworthy Data

Data governance refers to the processes and policies that ensure data is managed, protected, and used effectively. A robust data governance framework is crucial for ensuring data accuracy, data integrity, and data consistency.

- Data Quality Standards: Define and enforce quality standards for data, including accuracy, consistency, completeness, and timeliness.

- Data Stewardship: Assign roles to individuals or teams responsible for overseeing data quality and ensuring compliance with governance policies.

- Metadata Management: Ensure proper documentation and classification of data assets to maintain data trustworthiness.
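Quality standards are easier to enforce once they are written down as measurable thresholds. The following is a minimal sketch of that idea, not a prescribed implementation: the `STANDARDS` thresholds, the field names, and the `CUSTOMERS` sample are all hypothetical, and only two dimensions (completeness and uniqueness) are measured.

```python
# Hypothetical standards: "metric:field" -> minimum acceptable score (0.0 to 1.0).
STANDARDS = {
    "completeness:email": 0.95,
    "uniqueness:customer_id": 1.0,
}

# Illustrative sample data; not taken from any real system.
CUSTOMERS = [
    {"customer_id": 1, "email": "a@example.com"},
    {"customer_id": 2, "email": ""},
    {"customer_id": 2, "email": "c@example.com"},
    {"customer_id": 3, "email": "d@example.com"},
]


def completeness(records, field):
    """Share of records that have a non-empty value for `field`."""
    if not records:
        return 0.0
    filled = sum(1 for r in records if r.get(field) not in (None, ""))
    return filled / len(records)


def uniqueness(records, field):
    """Ratio of distinct `field` values to the number of records."""
    if not records:
        return 0.0
    return len({r.get(field) for r in records}) / len(records)


METRICS = {"completeness": completeness, "uniqueness": uniqueness}


def evaluate(records, standards=STANDARDS):
    """Score each standard and report whether its threshold is met."""
    report = {}
    for name, minimum in standards.items():
        metric, field = name.split(":")
        score = METRICS[metric](records, field)
        report[name] = {"score": score, "passed": score >= minimum}
    return report


report = evaluate(CUSTOMERS)
```

A data steward could run a check like this on each dataset and review any standard whose `passed` flag is false.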

2. Data Cleansing: Scrubbing the Data for Accuracy

Data cleansing is the process of identifying and rectifying errors, inconsistencies, and missing values in datasets. This is one of the most critical steps in ensuring data veracity.

- Remove Duplicates: Duplicated records can distort analysis, so it’s essential to remove them during the data cleaning process.

- Fill Missing Data: Incomplete data can hinder analysis, so missing values should be filled with accurate, reliable estimates or flagged for further investigation.

- Standardize Formats: Ensuring that data is in a consistent format helps prevent errors in analysis and decision-making.
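The three cleansing steps above can be sketched in a few lines of Python. This is an illustrative example under stated assumptions: the record layout, the `RAW_RECORDS` sample, and the two accepted date formats are hypothetical. Missing values are flagged for review here rather than estimated, which is one of the two options mentioned above.

```python
from datetime import datetime

# Hypothetical raw records; field names and values are illustrative only.
RAW_RECORDS = [
    {"id": "1", "email": "Ann@Example.com ", "signup": "2024-01-05", "age": "34"},
    {"id": "1", "email": "ann@example.com", "signup": "05/01/2024", "age": "34"},
    {"id": "2", "email": "bob@example.com", "signup": "2024-02-10", "age": ""},
]

# Assumed input formats: ISO dates and day/month/year.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")


def standardize_date(value):
    """Return the date as YYYY-MM-DD, or None if no known format matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value, fmt).date().isoformat()
        except ValueError:
            continue
    return None


def cleanse(records):
    """Standardize formats, flag missing values, and drop duplicate ids."""
    seen_ids = set()
    cleaned = []
    for rec in records:
        row = {
            "id": rec["id"].strip(),
            "email": rec["email"].strip().lower(),
            "signup": standardize_date(rec["signup"].strip()),
            # A missing age is kept as None and flagged, not guessed.
            "age": int(rec["age"]) if rec["age"].strip() else None,
        }
        row["needs_review"] = row["age"] is None or row["signup"] is None
        if row["id"] in seen_ids:
            continue  # keep only the first record seen for each id
        seen_ids.add(row["id"])
        cleaned.append(row)
    return cleaned


cleaned = cleanse(RAW_RECORDS)
```

Deduplicating on `id` alone is a simplification; real datasets often need fuzzy matching across several fields to find duplicates.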

3. Advanced Analytics and AI for Data Validation

Leveraging AI and machine learning tools can automate the process of detecting and correcting data quality issues.

- Anomaly Detection: Machine learning algorithms can identify outliers or anomalies in data, which can indicate errors or fraud.

- Automated Data Validation: AI can be used to verify the consistency and quality of data in real-time, ensuring its reliability before it’s used for analysis.

- Predictive Modeling: Predictive analytics tools can forecast expected data trends, giving teams a baseline against which stale or unreliable data can be spotted before it is used in decision-making.
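As a simple illustration of anomaly detection, the sketch below flags outliers using the modified z-score, which is based on the median absolute deviation (MAD). Note that this is a classical statistical technique standing in for the machine learning models mentioned above, not one of them. The `amounts` sample and the 3.5 threshold (a commonly used default) are assumptions for the example.

```python
from statistics import median


def mad_outliers(values, threshold=3.5):
    """Return the indices of values whose modified z-score exceeds `threshold`."""
    med = median(values)
    abs_dev = [abs(v - med) for v in values]
    mad = median(abs_dev)
    if mad == 0:
        return []  # no spread to measure against, so nothing is flagged
    # 0.6745 rescales the MAD so scores are comparable to standard z-scores
    # when the data is roughly normally distributed.
    return [i for i, d in enumerate(abs_dev) if 0.6745 * d / mad > threshold]


# Hypothetical card payments; the 950.0 entry is the planted anomaly.
amounts = [42.0, 39.5, 41.2, 40.8, 38.9, 950.0, 43.1]
flagged = mad_outliers(amounts)  # [5], the index of the 950.0 payment
```

The median-based score is used here because a single extreme value distorts the mean and standard deviation, which can hide the very outlier being sought.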

4. Continuous Monitoring and Auditing

Continuous monitoring and regular data audits ensure that data remains accurate and consistent over time.

- Real-Time Monitoring: Implement systems that monitor data feeds in real-time to flag any issues as they arise.

- Scheduled Audits: Perform regular audits to check for errors, inconsistencies, and changes in data patterns that could impact veracity.
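One way to picture continuous monitoring is a check that runs on every incoming batch and compares a quality metric with its recent baseline. The sketch below tracks the share of missing values in one field; the function names, the 0.05 tolerance, and the sample batches are hypothetical choices for illustration.

```python
from statistics import mean


def null_rate(batch, field):
    """Share of rows in the batch where `field` is missing or empty."""
    if not batch:
        return 1.0  # an empty batch is treated as fully missing
    missing = sum(1 for row in batch if row.get(field) in (None, ""))
    return missing / len(batch)


def check_batch(batch, field, history, tolerance=0.05):
    """Compare the batch's null rate with the mean of past healthy batches.

    Returns (rate, alert). Only batches that raise no alert are added to
    `history`, so a bad batch does not shift the baseline.
    """
    rate = null_rate(batch, field)
    baseline = mean(history) if history else rate
    alert = rate - baseline > tolerance
    if not alert:
        history.append(rate)
    return rate, alert


# Hypothetical null rates observed in earlier audits.
history = [0.01, 0.02, 0.015]

# Hypothetical incoming batch: 3 of 10 rows have no price.
bad_batch = [{"price": 9.99}] * 7 + [{"price": None}] * 3
bad_rate, bad_alert = check_batch(bad_batch, "price", history)

good_batch = [{"price": 9.99}] * 4
good_rate, good_alert = check_batch(good_batch, "price", history)
```

The same pattern extends to other metrics, such as row counts or duplicate rates, and a scheduled audit can reuse it over longer time windows.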

5. Data Provenance: Track the Journey of Data

Tracking the provenance or origin of data helps ensure its accuracy and consistency. By understanding where data comes from and how it has been transformed over time, organizations can validate its integrity.

- Data Lineage: Use data lineage tools to trace the path of data from its origin to its final destination. This makes it easier to identify errors or inconsistencies and correct them at the source.

- Transparent Processes: Ensure that the processes used to collect and analyze data are transparent and auditable to foster accountability.
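Dedicated lineage tools do this at scale, but the core idea can be shown with a small sketch: every transformation appends an entry recording what went in and what came out. The `apply_step` helper, the entry fields, and the sample records below are hypothetical and stand in for whatever a real lineage tool would capture.

```python
import hashlib
import json
from datetime import datetime, timezone


def fingerprint(records):
    """Stable SHA-256 hash of a list of JSON-serializable records."""
    payload = json.dumps(records, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def apply_step(records, lineage, name, transform):
    """Run `transform` on the records and append a lineage entry for it."""
    # Hash the input first, in case the transform mutates it in place.
    input_hash = fingerprint(records)
    rows_in = len(records)
    result = transform(records)
    lineage.append({
        "step": name,
        "input_hash": input_hash,
        "output_hash": fingerprint(result),
        "rows_in": rows_in,
        "rows_out": len(result),
        "at": datetime.now(timezone.utc).isoformat(),
    })
    return result


# Hypothetical two-step pipeline over illustrative records.
lineage = []
raw = [{"id": 1, "country": "us"}, {"id": 2, "country": None}]
kept = apply_step(raw, lineage, "drop_missing_country",
                  lambda rows: [r for r in rows if r["country"]])
final = apply_step(kept, lineage, "uppercase_country",
                   lambda rows: [{**r, "country": r["country"].upper()} for r in rows])
```

Because each step's output hash should match the next step's input hash, a mismatch indicates that data changed outside the recorded pipeline.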

6. Employee Training and Data Quality Awareness

Organizations need to build a culture of data quality, where employees are educated about the importance of maintaining data veracity.

- Training Programs: Regular training on data quality principles, tools, and best practices can help reduce human errors in data entry and analysis.

- Incentivize Accuracy: Encourage employees to prioritize data accuracy and data consistency by recognizing and rewarding good data stewardship.

Quantzig’s Approach to Ensuring Data Veracity

At Quantzig, we understand the importance of data veracity in Big Data and offer comprehensive data management solutions to ensure the highest quality of data for our clients. Our services are tailored to address the specific needs of each organization, ensuring data accuracy, data integrity, and data consistency at every step.

- Data Quality Solutions: Our team of experts works closely with clients to implement custom solutions for cleansing, validating, and enriching their datasets, ensuring data trustworthiness and reliability.

- AI-Driven Data Management: We leverage AI and machine learning tools to automate data validation, detect anomalies, and optimize predictive models, ensuring that businesses can trust their data.

- Data Governance Consulting: We help organizations establish a solid data governance framework, implementing policies and standards that ensure data accuracy and compliance with industry regulations.

Conclusion: Future-Proofing Your Data Strategy

In the era of Big Data, ensuring data veracity is not optional—it’s essential for success. Organizations that prioritize data quality, data integrity, and data accuracy will be better equipped to leverage the full potential of their data to drive innovation, improve decision-making, and gain a competitive advantage. With data governance, AI tools, and continuous monitoring, businesses can safeguard their data’s trustworthiness and ensure consistent, reliable insights.

By partnering with experts like Quantzig, businesses can ensure their data is not only abundant but also accurate, reliable, and ready for analysis. Data veracity is the foundation of actionable insights—build it strong, and your business will thrive.
