
Overview of Building Data Pipelines

Building data pipelines is a crucial step in ensuring structured, reliable, and timely data movement across various systems. A well-designed data pipeline enables organizations to process large datasets, ensure data accuracy, and optimize analytics workflows.

This guide explores the fundamentals of building data pipelines, highlights best practices, and showcases how Quantzig’s expertise helps enterprises implement scalable, automated, and real-time data pipelines to unlock actionable insights.


What is a Data Pipeline?

A data pipeline is a structured process that automates the flow of data from multiple sources to a destination, such as a data warehouse or analytics platform. It consists of various stages, including:

- Data Ingestion – Collecting raw data from diverse sources such as databases, APIs, IoT devices, and logs.

- Data Processing – Cleaning, transforming, and structuring data for analysis.

- Data Storage – Storing processed data in warehouses, lakes, or other repositories.

- Data Analytics & Visualization – Delivering insights through business intelligence (BI) tools and dashboards.

A well-optimized data pipeline supports real-time or batch processing, keeping latency within what the use case requires while preserving data accuracy.
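The four stages above can be illustrated with a minimal, in-memory sketch in Python. Everything here is hypothetical: the hard-coded rows stand in for a real source, a plain list stands in for a warehouse, and the function names `ingest`, `process`, `store`, and `analyze` are illustrative rather than part of any tool.

```python
from collections import defaultdict


def ingest() -> list[dict]:
    # Ingestion: hard-coded rows stand in for a database, API, device, or log source.
    return [
        {"region": "north", "amount": "120.50"},
        {"region": "south", "amount": "80"},
        {"region": "north", "amount": None},  # incomplete record
    ]


def process(rows: list[dict]) -> list[dict]:
    # Processing: drop incomplete rows and convert amount strings to floats.
    return [
        {"region": r["region"], "amount": float(r["amount"])}
        for r in rows
        if r["amount"] is not None
    ]


def store(rows: list[dict], warehouse: list[dict]) -> None:
    # Storage: an in-memory list stands in for a warehouse or lake table.
    warehouse.extend(rows)


def analyze(warehouse: list[dict]) -> dict[str, float]:
    # Analytics: total amount per region, the kind of figure a dashboard shows.
    totals = defaultdict(float)
    for row in warehouse:
        totals[row["region"]] += row["amount"]
    return dict(totals)


# Wire the stages together: each stage consumes the previous stage's output.
warehouse = []
store(process(ingest()), warehouse)
print(analyze(warehouse))
```

In a real pipeline each function would be replaced by a connector, a processing engine, or a storage client, but the shape of the flow stays the same.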

Benefits of a Well-Designed Data Pipeline

| Benefit | Description |
|---|---|
| Enhanced Data Quality | Reduces errors and inconsistencies in datasets |
| Faster Decision-Making | Real-time processing enables quick insights |
| Scalability | Handles increasing data volumes efficiently |
| Automation & Efficiency | Reduces manual effort, improving workflow productivity |
| Improved Security | Supports compliance with data governance standards |

Key Components of a Data Pipeline

| Component | Description |
|---|---|
| Data Sources | CRM, ERP, IoT, cloud platforms, transactional databases |
| Data Ingestion | Batch or real-time data collection via APIs, ETL tools |
| Processing Engine | Cleanses, transforms, and prepares data for analysis |
| Storage | Cloud data lakes, warehouses (e.g., AWS Redshift, Snowflake) |
| Analytics Layer | BI tools, ML models, dashboards for insights |
| Orchestration | Automation tools (e.g., Apache Airflow, AWS Glue) to manage workflows |

Steps to Building a Scalable Data Pipeline

1. Define Business Objectives

Before building a data pipeline, identify the data sources, the expected data volume, and the end goal. Whether the goal is real-time analytics, machine learning, or reporting, aligning the pipeline architecture with business needs keeps latency, cost, and complexity matched to what the business actually requires.

2. Choose the Right Data Ingestion Strategy

There are two primary data ingestion methods:

- Batch Processing – Data is collected and processed at predefined intervals (e.g., daily reports).

- Real-time Processing – Data is streamed continuously and processed as it arrives, enabling near-instant decisions (e.g., fraud detection in banking).
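To make the difference concrete, here is a minimal, hypothetical sketch in plain Python. It assumes events are `(timestamp_seconds, amount)` tuples; `batch_totals`, `stream_alerts`, and the amount threshold are illustrative names and a placeholder rule, not a real fraud model.

```python
from typing import Iterable, Iterator

Event = tuple[int, float]  # (timestamp in seconds, transaction amount)


def batch_totals(events: Iterable[Event], interval: int) -> dict[int, float]:
    # Batch: group events into fixed windows and compute each window's total
    # in one pass over the collected data, as a scheduled daily job would.
    totals: dict[int, float] = {}
    for ts, amount in events:
        window_start = ts - ts % interval
        totals[window_start] = totals.get(window_start, 0.0) + amount
    return totals


def stream_alerts(events: Iterable[Event], threshold: float) -> Iterator[Event]:
    # Real-time: inspect each event as it arrives and emit an alert immediately,
    # without waiting for a window to close.
    for event in events:
        if event[1] > threshold:
            yield event


events = [(0, 20.0), (30, 5000.0), (65, 15.0), (90, 40.0)]
print(batch_totals(events, interval=60))            # totals per 60-second window
print(list(stream_alerts(events, threshold=1000)))  # flagged while streaming
```

Both functions see the same events, but the batch result is only useful once a window is complete, while `stream_alerts` yields the large transaction as soon as it is read.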

3. Implement Data Transformation & Cleaning

Raw data needs cleansing and transformation before analysis. This involves:

- Removing duplicates and inconsistencies.

- Normalizing and structuring data.

- Handling missing values.
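The three cleaning steps can be sketched with the standard library alone. This is a hypothetical example: the record fields (`customer_id`, `email`, `country`), the choice to drop rows without an email, and the `UNKNOWN` default country are assumptions made for illustration.

```python
def clean(records: list[dict]) -> list[dict]:
    seen: set[str] = set()
    cleaned: list[dict] = []
    for rec in records:
        # Normalize: trim whitespace, lower-case emails, upper-case country codes.
        email = (rec.get("email") or "").strip().lower()
        country = (rec.get("country") or "").strip().upper()

        # Missing values: drop rows without an email, fill a default country.
        if not email:
            continue
        if not country:
            country = "UNKNOWN"

        # Duplicates: keep only the first record seen for each normalized email.
        if email in seen:
            continue
        seen.add(email)

        cleaned.append(
            {"customer_id": rec["customer_id"], "email": email, "country": country}
        )
    return cleaned


raw = [
    {"customer_id": 1, "email": " Ana@Example.com ", "country": "us"},
    {"customer_id": 2, "email": "ana@example.com", "country": "US"},  # duplicate
    {"customer_id": 3, "email": None, "country": "de"},  # missing email
    {"customer_id": 4, "email": "bo@example.com", "country": ""},  # missing country
]
print(clean(raw))
```

Normalizing before deduplicating matters here: `" Ana@Example.com "` and `"ana@example.com"` only count as duplicates once both are trimmed and lower-cased.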

4. Select an Optimal Storage Solution

Choosing the right storage depends on the use case:

| Storage Type | Use Case |
|---|---|
| Data Warehouse | Structured data for analytics (e.g., Snowflake) |
| Data Lake | Unstructured and semi-structured data storage |
| Hybrid Storage | Combines warehouse and lake for flexibility |
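The warehouse/lake distinction can be sketched with the standard library, under stated assumptions: an in-memory SQLite database stands in for a warehouse such as Snowflake, and a local folder of JSON Lines files stands in for a data lake. The `orders` table, its columns, and the folder layout are hypothetical.

```python
import json
import sqlite3
import tempfile
from pathlib import Path


def load_warehouse(conn: sqlite3.Connection, orders: list[tuple[int, str, float]]) -> None:
    # Warehouse: structured rows with a fixed schema, ready for SQL analytics.
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders (id INTEGER PRIMARY KEY, region TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", orders)
    conn.commit()


def write_lake(lake_dir: Path, source: str, events: list[dict]) -> Path:
    # Lake: raw, semi-structured events stored as-is; structure is applied on read.
    path = lake_dir / source / "events.jsonl"
    path.parent.mkdir(parents=True, exist_ok=True)
    with path.open("a", encoding="utf-8") as f:
        for event in events:
            f.write(json.dumps(event) + "\n")
    return path


conn = sqlite3.connect(":memory:")
load_warehouse(conn, [(1, "north", 120.5), (2, "south", 80.0)])
print(conn.execute(
    "SELECT region, SUM(amount) FROM orders GROUP BY region ORDER BY region"
).fetchall())

with tempfile.TemporaryDirectory() as tmp:
    p = write_lake(Path(tmp), "clickstream", [{"page": "/home", "tags": ["promo"]}, {"page": "/cart"}])
    print(p.read_text(encoding="utf-8"))
```

A hybrid setup would do both: land raw events in the lake and load the cleaned, structured subset into the warehouse.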

5. Automate Pipeline Orchestration

Automation tools streamline data workflows and reduce manual intervention. Common tools include:

- Apache Airflow – Open-source orchestration tool for scheduling and monitoring.

- AWS Glue – Cloud-based ETL (Extract, Transform, Load) service.

- Databricks – Optimized for big data and AI workflows.
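Orchestrators such as Apache Airflow model a workflow as a graph of tasks with dependencies. The sketch below is not Airflow's API; it is a hypothetical, stdlib-only illustration (`run_dag` is an invented name) that uses `graphlib.TopologicalSorter` (Python 3.9+) to run each task only after its upstream tasks, with a simple retry loop.

```python
from graphlib import TopologicalSorter
from typing import Callable


def run_dag(
    tasks: dict[str, Callable[[], object]],
    deps: dict[str, set[str]],
    retries: int = 2,
) -> list[str]:
    # Every task is a node; deps maps a task to the upstream tasks it waits on.
    graph = {name: deps.get(name, set()) for name in tasks}
    completed: list[str] = []
    for name in TopologicalSorter(graph).static_order():
        for attempt in range(retries + 1):
            try:
                tasks[name]()
                completed.append(name)
                break
            except Exception as exc:
                # Retry transient failures; after the last attempt, stop the run
                # so no downstream task executes on top of a failed upstream one.
                if attempt == retries:
                    raise RuntimeError(
                        f"task {name!r} failed after {retries + 1} attempt(s)"
                    ) from exc
    return completed


log = []
tasks = {
    "extract": lambda: log.append("extract"),
    "transform": lambda: log.append("transform"),
    "load": lambda: log.append("load"),
}
deps = {"transform": {"extract"}, "load": {"transform"}}
print(run_dag(tasks, deps))
```

If the dependency map contains a cycle, `static_order()` raises `graphlib.CycleError` before any task runs, which surfaces a circular dependency early.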

6. Ensure Data Quality & Security

Data accuracy and security are critical for reliable insights. Strategies include:

- Implementing data validation rules to detect anomalies.

- Encrypting data at rest and in transit.

- Applying role-based access control (RBAC) to prevent unauthorized access.
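The validation and RBAC points can be sketched in a few lines of Python. This is hypothetical: the rule names, fields, roles, and permissions are illustrative, and encryption is left out of this sketch.

```python
from typing import Callable

# Each rule maps a name to a predicate that returns True when a row is valid.
RULES: dict[str, Callable[[dict], bool]] = {
    "amount_non_negative": lambda r: r.get("amount") is not None and r["amount"] >= 0,
    "currency_three_letters": lambda r: (
        isinstance(r.get("currency"), str)
        and len(r["currency"]) == 3
        and r["currency"].isalpha()
    ),
}

# RBAC: permissions are granted to roles, and users act through a role.
ROLE_PERMISSIONS: dict[str, set[str]] = {
    "analyst": {"read"},
    "engineer": {"read", "write"},
}


def validate(rows: list[dict]) -> list[tuple[int, str]]:
    # Return (row_index, failed_rule) pairs so anomalies can be logged or quarantined.
    return [
        (i, name)
        for i, row in enumerate(rows)
        for name, rule in RULES.items()
        if not rule(row)
    ]


def is_allowed(role: str, action: str) -> bool:
    # Unknown roles get no permissions (deny by default).
    return action in ROLE_PERMISSIONS.get(role, set())


rows = [
    {"amount": 10.0, "currency": "USD"},
    {"amount": -5.0, "currency": "usd"},
    {"currency": "EURO"},
]
print(validate(rows))
print(is_allowed("analyst", "write"), is_allowed("engineer", "write"))
```

Returning every failed rule, rather than stopping at the first, makes it easier to log anomalies or quarantine bad rows; denying unknown roles by default keeps a misconfigured user from gaining access.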

7. Monitor & Optimize Performance

Regularly track pipeline performance using:

- Latency metrics – Time taken for data to move through the pipeline.

- Error logs – Identifying and troubleshooting failures.

- Scaling strategies – Adapting to growing data volumes with cloud-based solutions.
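Latency metrics and error logs can be collected with a small decorator around each stage. This sketch uses only the standard library; the `monitored` decorator, the `pipeline` logger name, the in-memory `LATENCIES` dict, and the sample `transform` and `load` stages are all hypothetical.

```python
import logging
import time
from functools import wraps
from typing import Any, Callable

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("pipeline")
LATENCIES: dict[str, list[float]] = {}  # stage name -> recorded durations (seconds)


def monitored(stage: Callable[..., Any]) -> Callable[..., Any]:
    @wraps(stage)
    def wrapper(*args: Any, **kwargs: Any) -> Any:
        start = time.perf_counter()
        try:
            return stage(*args, **kwargs)
        except Exception:
            # Error log: record the failing stage with its traceback, then re-raise.
            log.exception("stage %s failed", stage.__name__)
            raise
        finally:
            # Latency metric: seconds spent in this stage, on success or failure.
            elapsed = time.perf_counter() - start
            LATENCIES.setdefault(stage.__name__, []).append(elapsed)
            log.info("stage %s took %.4f s", stage.__name__, elapsed)
    return wrapper


@monitored
def transform(rows: list[int]) -> list[int]:
    return [r * 2 for r in rows]


@monitored
def load(rows: list[int]) -> int:
    if not rows:
        raise ValueError("nothing to load")
    return len(rows)
```

For example, `load(transform([1, 2, 3]))` returns `3` and records one timing per stage, while `load([])` logs the traceback, records its latency, and re-raises the `ValueError`. In practice these numbers would be exported to whatever monitoring system the team already uses.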

How Quantzig Helps in Building Data Pipelines

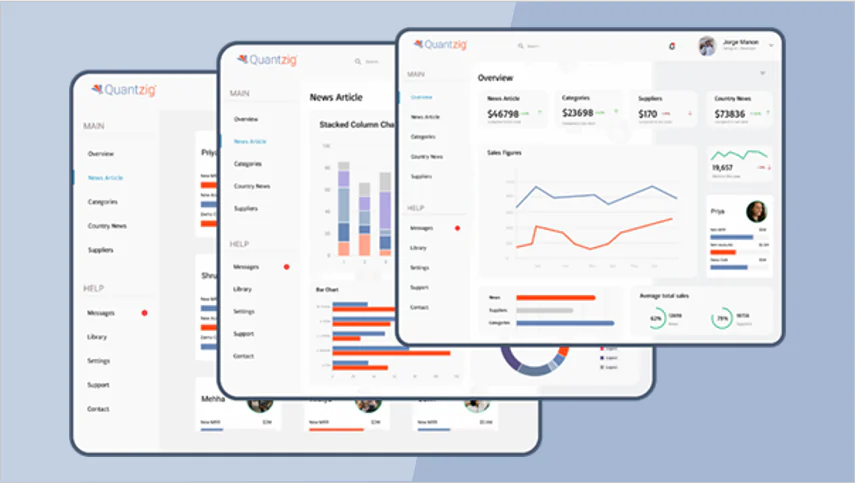

Quantzig specializes in designing and implementing scalable, automated, and real-time data pipelines that empower businesses with seamless data flow and enhanced analytics. By leveraging cutting-edge technologies and industry best practices, Quantzig helps enterprises unlock the true potential of their data and make informed decisions in real time.

Having a well-structured data pipeline is crucial for driving operational efficiency and gaining actionable insights. With Quantzig’s expertise in building data pipelines, businesses can achieve real-time data integration, improved decision-making, and scalable analytics capabilities.

Experience the advantages firsthand by testing a customized, complimentary pilot designed to address your specific requirements. Pilots are non-binding.


Conclusion

Building data pipelines is a foundational step for businesses aiming to harness the power of data for informed decision-making. A well-architected pipeline not only ensures seamless data integration but also enhances processing speed, accuracy, and security. By leveraging modern automation tools and cloud-based solutions, organizations can optimize their data workflows for real-time insights.

Quantzig’s expertise in building data pipelines empowers enterprises to design scalable, automated, and AI-driven solutions that drive business growth. Whether you need real-time data processing or a robust data architecture, we help you unlock the full potential of your data.